LLMs Struggle Applying Grammatical Categories

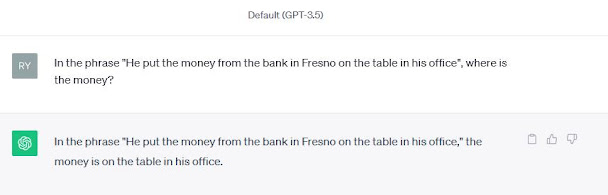

ChatGPT isn’t actually dismal when it comes to answering factual questions about preposition-dense sentences, but when I began prodding it more directly about the prepositions in the sentence (below), it made some very basic mistakes in its responses before eventually getting caught in a loop and self-destructing. Its output is functional, but does not reflect any real grasp of the grammatical concepts it is working with.

Counting the prepositions in a sentence should be a simple task and its initial mistake of misclassifying “his” is utterly bizarre.

This was in a sense an unfair question, as I cannot come up

with a valid response myself and suspect there is none, but that is beside the

point. The option was open to it to respond that it was not able to put

together a valid solution, but for some reason it chose to press onward introducing error after error until it crashed.

It isn’t by default aware of the role that any particular word is playing in the sentence it is constructing and it is plagued by a strange sort of inattention. It can tell you the definition of a preposition and a list of the words that are considered prepositions, but when prompted to scan a sentence for prepositions, it may overclassify or overlook instances of them. Overlooking prepositions seems to be one of the core problems in the replacement task I set for it: it appeared to identify the first preposition in the sentence and stop the search there. It then rewrote the sentence without that word, without checking whether the new sentence contained a preposition.

I ran the following set of examples to try to clarify what specifically is going wrong when ChatGPT attempts to apply word categories to a sentence:

It did in all cases identify and replace instances of the word category I indicated, but it also consistently overextended its replacement to include words outside of that category. I tried repeating the same prompts, and it is interesting to note that it produced different output with every repetition, though the overextension was always present.

So what are we to conclude from all of this? An LLM can tell you all about grammatical categories and the output it produces abides by grammatical rules, but it cannot actually apply that knowledge to its own output. This is because it does not actually possess that knowledge in any deployable form. It can assemble it for a user to view given the right prompt, but this requires no understanding on its own part. The output it produces is grammatical not because it has any grasp of grammatical rules, but because grammatical sentences are more common than ungrammatical ones in the data it has been trained on.

To apply the grammatical category of “noun” or “preposition” when scanning words in a sentence is not a difficult task for anyone with a basic grasp of the language. And that is precisely the point—an LLM does not have even a basic grasp of language. Its apparent competence is a statistically-achieved illusion that can readily shatter under pressure.

Comments

Post a Comment