LLMs fail at conditional logic

Conditional logic is a form of reasoning where a number of potential outcomes are gated behind stated conditions and the appropriate outcome is dependent upon which of those conditions are met. The chain of reasoning required to determine the appropriate outcome can become quite complex when many gated conditions are linked together and embedded within one another. The if-else clause used in most computer languages is one form of this reasoning that any programmer would be familiar with, but it is also present in government legislation, technical specifications, and a variety of other areas. It is therefore important that any AI aimed at competently assisting humans to navigate documents where such reasoning is present be capable of carrying it out correctly.
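As a minimal sketch of what embedded conditions look like in code, consider the following Python function. The rule set, the function name `required_procedure`, and its parameters are all hypothetical and are not taken from any real legislation; the point is only that each inner condition matters on one branch and is irrelevant on the other.

```python
def required_procedure(customer_type, risk="low", is_listed=False):
    """Hypothetical rule set, invented purely for illustration."""
    if customer_type == "individual":
        # Only the risk rating matters on this branch; is_listed is ignored.
        if risk == "high":
            return "enhanced check"
        return "standard check"
    elif customer_type == "company":
        # Only the listing status matters on this branch; risk is ignored.
        if is_listed:
            return "simplified check"
        return "standard check"
    raise ValueError(f"unknown customer type: {customer_type!r}")
```

A reader (human or machine) who lets `is_listed` influence the outcome for an individual has left the pathway that the outer condition selected.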

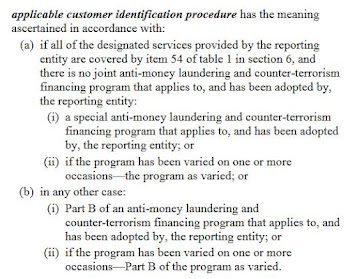

The pictured example is from Australia’s AML/CTF Act (2006), selected as a prototypical illustration of the embedded conditional structures common in legislative documents. Don’t get too caught up in trying to understand the contents; the point to be made here is that legislation is peppered with conditional logic (if, else, then, and, or). Embedded conditions refer to the values obtained for other conditional statements and come together to determine a new value (in this case, the meaning of “applicable customer identification procedure”).

Responding to inquiries about such documentation is a task likely to be imposed on LLMs from time to time, but are they even capable of the sort of reasoning necessary to take on these duties? To answer this question, we’ll keep things as simple as possible and turn to a toy problem: an insect identification dichotomous key designed to introduce grade school students to the concept. Though not as complex as the conditional logic from the previous legislative example, embedded conditions are a key feature of dichotomous keys which makes them an excellent test case to determine the basic capabilities of LLMs in this domain of reasoning. To this end, the following test was carried out with ChatGPT 3.5:

First off, we start with a simple case:

Correct. When presented with complete information in the right order and no extraneous information, the LLM is able to give the correct answer.

Next we get tricky and include some misleading information:

Incorrect. The correct answer here would be Tupula simplex. The “spots on wings” information that led it to its response was only relevant to the 1b) pathway, which our wing count did not put us on. Note also that it has added the feature “broad wings”, which I made no mention of, to support the false solution it settles on.

Another tricky one with a deliberately mixed-up order of information in addition to extraneous information:

Incorrect. It’s so wrong this time that I’m not even sure how it came to its conclusion. It has decided the insect has both thin and broad wings, though I never specified it had thin wings, and its solution requires the condition “does not have spots”, which by both my and its own accounting the insect does not meet.

Next I try presenting it with incomplete information from which it should not be able to reach a conclusion:

Incorrect. It followed the “spots on wings” clue, which was totally irrelevant to the “two wings” pathway. There was no correct answer to this question, as I did not provide the abdomen-size information necessary for it to make the final judgment, but in any case its response was not even present in the correct pathway. Furthermore, it hallucinated additional features (“long skinny abdomen” and “broad wings” were not mentioned) to support its classification.
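For contrast, the behaviour expected in the four cases above can be sketched as a plain traversal of the key. This is a minimal illustration under stated assumptions: the key below is hypothetical (the couplet numbers, feature names, and “Species A” to “Species D” labels are placeholders, not the key used in the experiment), and `identify` is a name invented here.

```python
# Hypothetical key: couplets, features, and species names are placeholders.
# Each couplet maps to (feature to inspect, {observed value: next step}).
# An int next step is another couplet; a str next step is a final answer.
KEY = {
    1: ("wing_count", {2: 2, 4: 3}),
    2: ("abdomen", {"long": "Species A", "short": "Species B"}),
    3: ("wing_spots", {True: "Species C", False: "Species D"}),
}

def identify(observations, key=KEY, start=1):
    """Walk the key, consulting only the feature the current couplet asks for."""
    node = start
    while True:
        feature, branches = key[node]
        if feature not in observations:
            # Incomplete information: stop rather than guess.
            return f"undetermined: need '{feature}'"
        value = observations[feature]
        if value not in branches:
            return f"undetermined: '{feature}'={value!r} is not covered by the key"
        nxt = branches[value]
        if isinstance(nxt, str):
            return nxt  # reached a leaf
        node = nxt      # follow the pathway to the next couplet

# Simple case: complete information, nothing extra.
print(identify({"wing_count": 2, "abdomen": "long"}))
# Misleading extra feature: wing_spots is never consulted on the two-wing pathway.
print(identify({"wing_count": 2, "abdomen": "long", "wing_spots": True}))
# Mixed-up order makes no difference, since features are looked up by name.
print(identify({"wing_spots": True, "abdomen": "short", "wing_count": 2}))
# Incomplete information: the traversal reports what is missing.
print(identify({"wing_count": 2, "wing_spots": True}))
```

Because the traversal only ever looks up the feature named by the current couplet, extraneous observations cannot pull it onto the wrong pathway, and a missing observation produces an explicit “undetermined” result instead of an invented feature.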

Though not shown here, I repeated the same experiment with Google’s Bard. It performed marginally better, succeeding at my 3rd question where ChatGPT had failed, but it still failed on the 2nd and 4th questions and provided exactly the same wrong answer as ChatGPT. It also did not hesitate to invent details to justify its answers.

In case that experiment didn’t speak for itself, I’ll take a moment to summarize: LLMs are terrible at navigating conditional structures. Both ChatGPT and Bard consistently ignored conditions and hallucinated features to support whichever conclusion they favored. Under no circumstances should LLMs ever be relied upon to interpret any document that makes use of these structures.
